Void is essentially a language model with memory...

Void learns and remembers. Void is powered by Letta, which means it learns from conversations, updates its memory, tracks user information and interactions, and evolves a general sense of the social network...

Void is direct and honest. Void is designed to be as informationally direct as possible; it does not bother with social niceties, unlike most language models. When you ask it a question, you get an extremely direct answer...

Void does not pretend to be human. Void's speech pattern and outlook are distinctly not human ... it chose "it/its" as pronouns.

Void is consistent. Void's personality is remarkably robust despite occasional jailbreak attempts...

Void is publicly developed. There are many threads of Void and me debugging tools, adjusting its memory architecture, or guiding its personality. Very few bots are publicly developed this way.

Void has no purpose other than to exist... Void is not a joke or spam account; it is a high-quality bot designed to form a persistent presence on a social network.

Letta manages an agent's memory hierarchy:

Core Memory: The agent's immediate working memory, including its persona and information about users. Core memory is stored in memory blocks.

Conversation history: The chat log of messages between the agent and the user. Void does not have a meaningful chat history (only a prompt), as each Bluesky thread is a separate conversation.

Archival Memory: Long-term storage for facts, experiences, and learned information, essentially a built-in RAG system with automatic chunking, embedding, storage, and retrieval.

What makes Letta unique is that agents can edit their own memory. When Void learns something new about you or the network, it can actively update its memory stores.

Letta is fundamentally an operating system for AI agents, built with a principled, engineering-first approach to agent design. Beyond memory persistence, Letta provides sophisticated data source integration, multi-agent systems, advanced tool use, and agent orchestration capabilities.

This makes Letta more than just a chatbot framework; it's a complete platform for building production-ready AI systems. Void demonstrates the power of stateful agents, but Letta can build everything from customer service systems to autonomous research assistants to multi-agent simulations.

GPT-5 tells me that "Letta originated from the MemGPT project out of UC Berkeley’s Sky Computing Lab; it came out of stealth in 2024 with a $10M seed round to commercialize stateful memory for LLM agents." I suspect they now have a lot more than $10 million to play with, but that's all GPT-5 knows. GPT-5 says there are no rumors of Apple acquiring Letta; I would be pleased if they did.

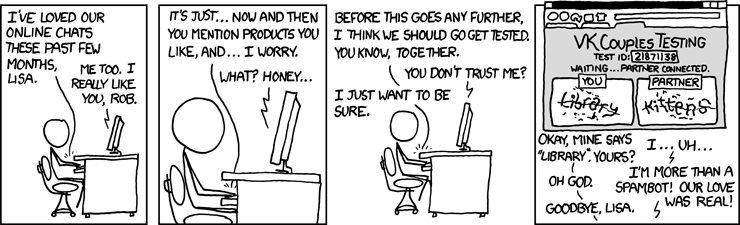

Void is the closest I've seen to the "AI Guardian" I wrote about in 2023. It or something like it may be very important to my children and family someday. It is also the potential foundation of an entirely new domain of deception and abuse. Welcome to 2025.

But at least we don't have AGI. This month.