It may have a problem with science-related employment, however ...

The Science Education Myth (Business Week, Vivek Wadhwa)

Political leaders, tech executives, and academics often claim that the U.S. is falling behind in math and science education. They cite poor test results, declining international rankings, and decreasing enrollment in the hard sciences. They urge us to improve our education system and to graduate more engineers and scientists to keep pace with countries such as India and China.

Yet a new report by the Urban Institute, a nonpartisan think tank, tells a different story. The report disproves many confident pronouncements about the alleged weaknesses and failures of the U.S. education system. This data will certainly be examined by both sides in the debate over highly skilled workers and immigration (BusinessWeek.com, 10/10/07). The argument by Microsoft (MSFT), Google (GOOG), Intel (INTC), and others is that there are not enough tech workers in the U.S.

The authors of the report, the Urban Institute's Hal Salzman and Georgetown University professor Lindsay Lowell, show that math, science, and reading test scores at the primary and secondary level have increased over the past two decades, and U.S. students are now close to the top of international rankings. Perhaps just as surprising, the report finds that our education system actually produces more science and engineering graduates than the market demands....

... As far as our workforce is concerned, the new report showed that from 1985 to 2000 about 435,000 U.S. citizens and permanent residents a year graduated with bachelor's, master's, and doctoral degrees in science and engineering. Over the same period, there were about 150,000 jobs added annually to the science and engineering workforce. These numbers don't include those retiring or leaving a profession but do indicate the size of the available talent pool. It seems that nearly two-thirds of bachelor's graduates and about a third of master's graduates take jobs in fields other than science and engineering...

So the data suggests we actually graduate more scientists and engineers than we have jobs for. Encouraging science education isn't going to make more scientists, any more than encouraging farming education will make more American farmers.
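A quick back-of-the-envelope check of the quoted figures, as a minimal sketch. The variable names are my own, and this is plain arithmetic on the two annual averages from the article, not the report's methodology.

```python
# Annual averages for 1985-2000, as quoted above from the Urban Institute report.
graduates_per_year = 435_000   # S&E bachelor's, master's, and doctoral graduates
jobs_added_per_year = 150_000  # jobs added to the S&E workforce

# Hypothetical names for illustration; simple ratio and difference only.
graduates_per_new_job = graduates_per_year / jobs_added_per_year
annual_gap = graduates_per_year - jobs_added_per_year

print(f"Graduates per new job: {graduates_per_new_job:.1f}")
print(f"Graduates beyond new jobs each year: {annual_gap:,}")
```

As the article itself notes, these numbers leave out openings created by people retiring or leaving the profession, so the real gap is presumably smaller than this raw difference.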

There's better-paid work for smart American students in other domains.

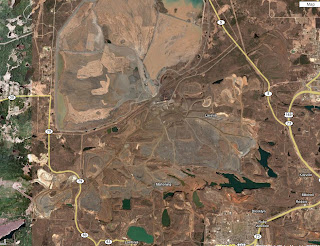

Which brings us back to the farming analogy. The US is a post-agricultural nation that does agriculture as an expensive hobby. Are we a post-science nation too?

BTW, I'm so pleased someone has done the research on this. I love to have my intuitions confirmed ...