TL;DR: It's not that ChatGPT is miraculous; it's that cognitive science research suggests human cognition isn't miraculous either.

"Those early airplanes were nothing compared to our pigeon-powered flight technology!"

https://chat.openai.com/chat - "Write a funny but profound sentence about what pigeons thought of early airplanes"

| Relax | Be Afraid |
| --- | --- |
| ChatGPT is just a fancy autocomplete. | Much of human language generation may be a fancy autocomplete. |
| ChatGPT confabulates. | Humans with cognitive disabilities routinely confabulate and under enough stress most humans will confabulate. |
| ChatGPT can’t do arithmetic. | IF a monitoring system can detect a question involves arithmetic or mathematics it can invoke a math system*. UPDATE: 2 hours after writing this I read that this has been done. |
| ChatGPT’s knowledge base is faulty. | ChatGPT’s knowledge base is vastly larger than that of most humans and it will quickly improve. |
| ChatGPT doesn’t have explicit goals other than a design goal to emulate human interaction. | Other goals can be implemented. |
| We don’t know how to emulate the integration layer humans use to coordinate input from disparate neural networks and negotiate conflicts. | *I don't know the status of such an integration layer. It may already have been built. If not it may take years or decades -- but probably not many decades. |
| We can’t even get AI to drive a car, so we shouldn’t worry about this. | It’s likely that driving a car basically requires near-human cognitive abilities. The car test isn’t reassuring. |
| ChatGPT isn’t conscious. | Are you conscious? Tell me what consciousness is. |
| ChatGPT doesn’t have a soul. | Show me your soul. |
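For the curious, the arithmetic row above can be sketched in a few lines. This is only a toy illustration of the "monitor detects math, then hands off to a math system" idea, not a description of how ChatGPT or any real product does it. Every name here (`answer`, `evaluate`, the `language_model` callback) is made up for the example, and the "monitor" is just a regular expression.

```python
import ast
import operator
import re

# Hypothetical toy router; all names are assumptions made for illustration.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}

# "Monitor": a run of digits, operators, parentheses and spaces.
_EXPR = re.compile(r"[\d\(][\d\.\s\+\-\*/\(\)]*[\d\)]")


def evaluate(node):
    """'Math system': evaluate a parsed expression, allowing only + - * /."""
    if isinstance(node, ast.Expression):
        return evaluate(node.body)
    if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
        return node.value
    if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
        return _OPS[type(node.op)](evaluate(node.left), evaluate(node.right))
    if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
        return -evaluate(node.operand)
    raise ValueError("unsupported expression")


def answer(question, language_model):
    """Route arithmetic to the math system, everything else to the model."""
    for match in _EXPR.finditer(question):
        expr = match.group()
        # A bare number such as a year is not arithmetic; require an operator.
        if any(op in expr for op in "+-*/"):
            try:
                return str(evaluate(ast.parse(expr, mode="eval")))
            except (SyntaxError, ValueError, ZeroDivisionError):
                continue  # not really arithmetic after all; keep looking
    return language_model(question)


# Stand-in for the chat model; a real one would be far more interesting.
print(answer("What is 12 * (3 + 4)?", lambda q: "(model answers) " + q))  # prints 84
```

A real monitor would presumably be much smarter than a regular expression, but the shape is the same: detect, hand off, and fall back to the language model when the detection was wrong.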

Relax - I'm bad at predictions. In 1945 I would have said it was impossible, barring celestial intervention, for humanity to go 75 years without nuclear war.

See also:

- All posts tagged as skynet

- Scott Aaronson and the case against strong AI (2008). At that time Aaronson expected sentient AI to arrive sometime after 2100. Fifteen years later (Jan 2023) Scott is working for OpenAI (ChatGPT). Emphases mine: "I’m now working at one of the world’s leading AI companies ... that company has already created GPT, an AI with a good fraction of the fantastical verbal abilities shown by M3GAN in the movie ... that AI will gain many of the remaining abilities in years rather than decades, and ... my job this year—supposedly!—is to think about how to prevent this sort of AI from wreaking havoc on the world."

- Imagining the Singularity - in 1965 (2009 post). Mathematician I.J. Good warned of an "intelligence explosion" in 1965. "Irving John ("I.J."; "Jack") Good (9 December 1916 – 5 April 2009) was a British statistician who worked as a cryptologist at Bletchley Park."

- The Thoughtful Slime Mold (2008). We don't fly like birds fly.

- Fermi Paradox resolutions (2000)

- Against superhuman AI: in 2019 I felt reassured.

- Mass disability (2012) - what happens as more work is done best by non-humans. This post mentions Clark Goble, an app.net conservative I miss quite often. He died young.

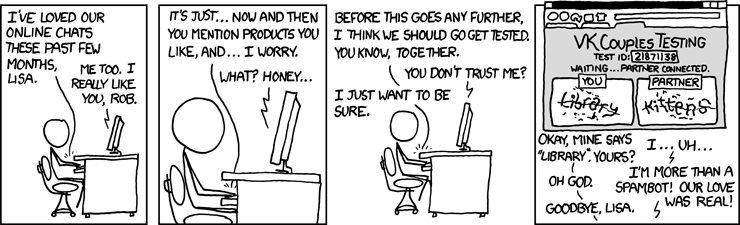

- Phishing with the post-Turing avatar (2010). I was thinking 2050 but now 2025 is more likely.

- Rat brain flies plane (2004). I've often wondered what happened to that work.

- Cat brain simulator (2009). "I used to say that the day we had a computer roughly as smart as a hamster would be a good day to take the family on the holiday you've always dreamed of."

- Slouching towards Skynet (2007). Theories on the evolution of cognition often involve aspects of deception including detection and deceit.

- IEEE Singularity Issue (2008). Widespread mockery of the Singularity idea followed.

- Bill Joy - Why the Future Doesn't Need Us (2000). See also Wikipedia summary. I'd love to see him revisit this essay but, again, he was widely mocked.

- Google AI in 2030? (2007) A 2007 prediction by Peter Norvig that we'd have strong AI around 2030. That ... is looking possible.

- Google's IQ boost (2009) Not directly related to this topic but reassurance that I'm bad at prediction. Google went to shit after 2009.

- Skynet cometh (2009). Humor.

- Personal note - in 1979 or so John Hopfield excitedly described his work in neural networks to me. My memory is poor but I think we were outdoors at the Caltech campus. I have no recollection of why we were speaking, maybe I'd attended a talk of his. A few weeks later I incorporated his explanations into a Caltech class I taught to local high school students on Saturday mornings. Hopfield would be about 90 if he's still alive. If he's avoided dementia it would be interesting to ask him what he thinks.